Flutter Text & Barcode Scanner App with Firebase ML Kit

Published on Jun 11, 2018

ML Kit is a collection of powerful machine learning APIs that Google released to the public under the Firebase brand at Google I/O 2018. It comes with a set of ready-to-use APIs, such as text recognition, barcode scanning, face detection, image labelling, and landmark recognition, that developers can use without any knowledge of machine learning.

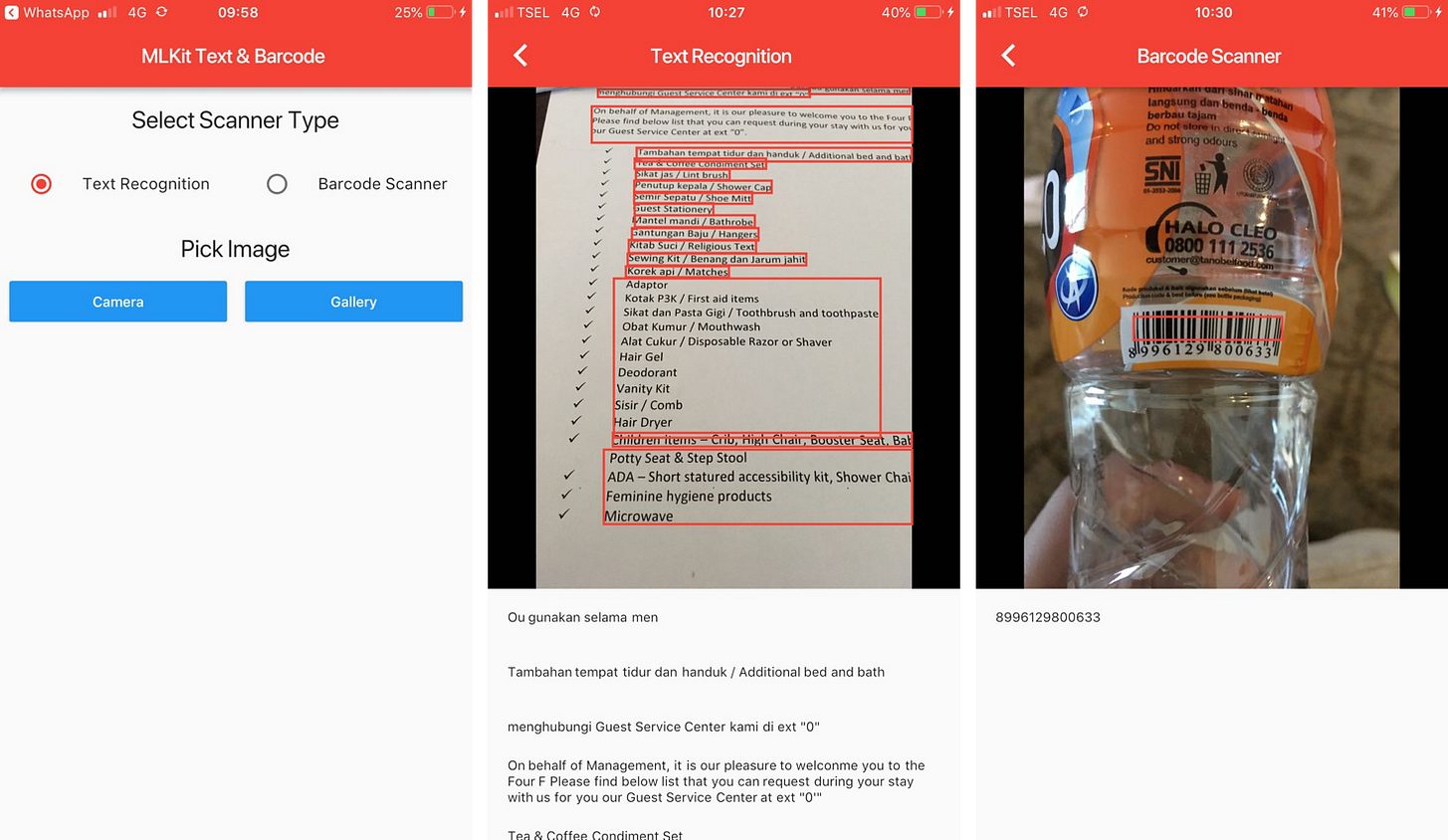

In this article, we will use text recognition and barcode scanning to build a Flutter app. The user picks an image from the camera or the gallery, and the app uses ML Kit to detect the text or barcodes in it. The app then displays the detection results to the user as text or metadata.

What we will build:

- Main Widget is the screen where the user selects the scanner type and picks an image from the camera or the gallery.

- Detail Widget is the screen where image detection is performed and the results are displayed to the user.

Third Party Dependencies Integration

Firebase does not provide an official SDK for using ML Kit from Flutter. Luckily, we can use the mlkit library by azihsoyn, which provides a platform channel implementation on top of the iOS and Android ML Kit SDKs. To use it, we need to create a Firebase project with Android and iOS apps that use the same applicationId and bundle identifier as our app. Then we add the downloaded google-services.json and GoogleService-Info.plist files to the Flutter project's Android and iOS directories.

To get an image, we will use the official image_picker plugin. It supports both taking a picture with the camera and selecting an image from the gallery, on iOS and Android. Both plugins are declared in the project's pubspec.yaml:

```yaml
name: flutter_mlkit_text_barcode
description: A new Flutter project.

dependencies:
  flutter:
    sdk: flutter
  cupertino_icons: ^0.1.2
  path_provider: 0.3.0
  mlkit: ^0.2.2
  image_picker: ^0.4.4

dev_dependencies:
  flutter_test:
    sdk: flutter

flutter:
  uses-material-design: true
```

Main Screen Widget

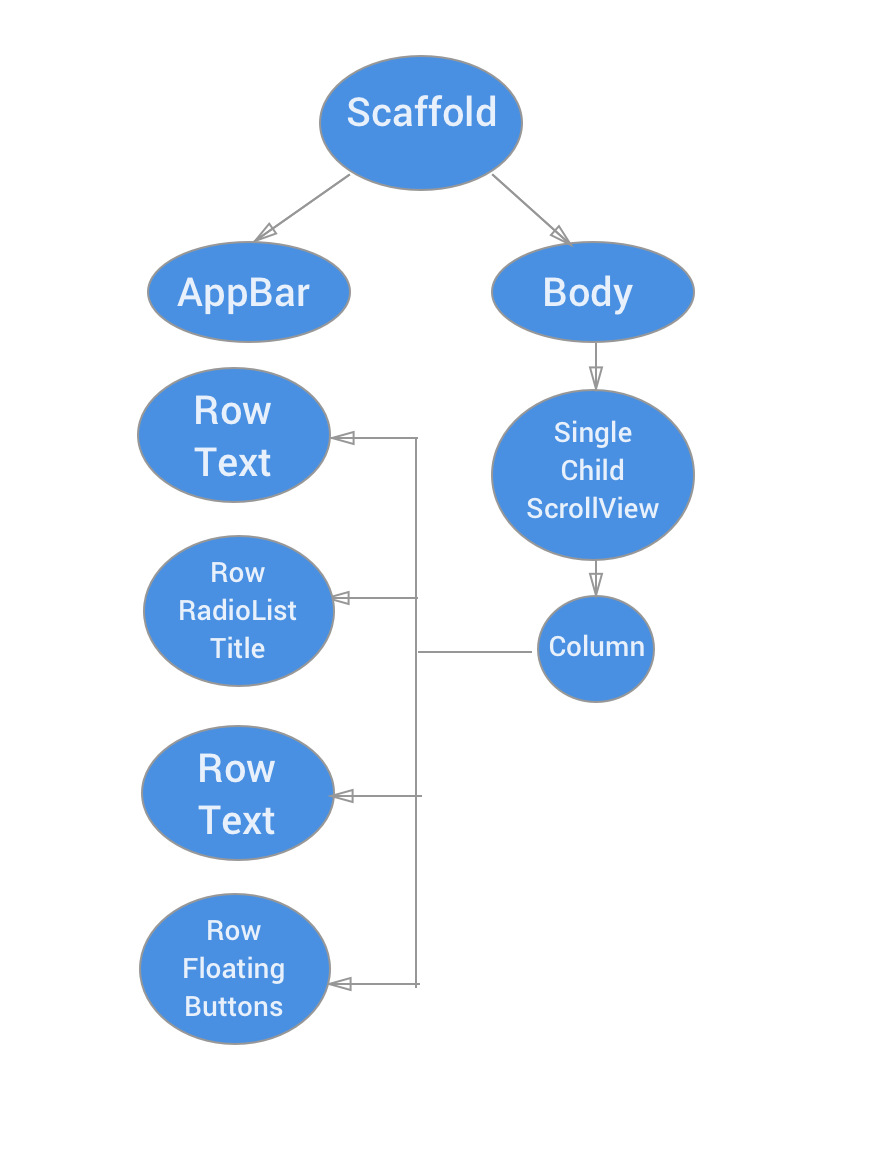

The main screen widget tree diagram:

A Row containing two RadioListTile widgets lets the user choose between text recognition and the barcode scanner. The selected value is stored in the _selectedScanner instance variable.
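The app stores the scanner choice as one of two string constants. As a hedged alternative, and not what this app does, an enhanced enum would let the compiler catch misspelled scanner names and keep each type's label next to it. This is a minimal sketch assuming Dart 2.17 or later. ScannerType and title are hypothetical names.

```dart
/// Hypothetical replacement for the TEXT_SCANNER / BARCODE_SCANNER strings.
enum ScannerType {
  text('Text Recognition'),
  barcode('Barcode Scanner');

  const ScannerType(this.title);

  /// Label that could be reused for the radio tile and the detail AppBar.
  final String title;
}
```

With this type, the radio tiles would become RadioListTile<ScannerType> and _selectedScanner would hold a ScannerType value instead of a String.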

A Row containing two RaisedButton widgets lets the user choose how to get the image: take a picture with the platform camera app, or select one from the photo library. After the user picks an image, we use the Navigator class to push the DetailWidget, passing the picked file and the selected scanner type to its constructor.

```dart
import 'dart:io';

import 'package:flutter/material.dart';
import 'package:image_picker/image_picker.dart';

// The package name must match the `name` declared in pubspec.yaml.
import 'package:flutter_mlkit_text_barcode/detail.dart';

const String TEXT_SCANNER = 'TEXT_SCANNER';
const String BARCODE_SCANNER = 'BARCODE_SCANNER';

class Home extends StatefulWidget {
  Home({Key key}) : super(key: key);

  @override
  State<StatefulWidget> createState() => _HomeState();
}

class _HomeState extends State<Home> {
  static const String CAMERA_SOURCE = 'CAMERA_SOURCE';
  static const String GALLERY_SOURCE = 'GALLERY_SOURCE';

  final GlobalKey<ScaffoldState> _scaffoldKey = GlobalKey<ScaffoldState>();
  File _file;
  String _selectedScanner = TEXT_SCANNER;

  @override
  Widget build(BuildContext context) {
    final columns = <Widget>[
      buildRowTitle(context, 'Select Scanner Type'),
      buildSelectScannerRowWidget(context),
      buildRowTitle(context, 'Pick Image'),
      buildSelectImageRowWidget(context),
    ];

    // Alternative layout (disabled): show the picked image with a delete button.
    // if (_file == null) {
    //   columns.add(buildRowTitle(context, 'Pick Image'));
    //   columns.add(buildSelectImageRowWidget(context));
    // } else {
    //   columns.add(buildImageRow(context, _file));
    //   columns.add(buildDeleteRow(context));
    // }

    return Scaffold(
      key: _scaffoldKey,
      appBar: AppBar(
        centerTitle: true,
        title: Text('MLKit Text & Barcode'),
      ),
      body: SingleChildScrollView(
        child: Column(children: columns),
      ),
    );
  }

  Widget buildRowTitle(BuildContext context, String title) {
    return Center(
      child: Padding(
        padding: EdgeInsets.symmetric(horizontal: 8.0, vertical: 16.0),
        child: Text(title, style: Theme.of(context).textTheme.headline),
      ),
    );
  }

  // Two buttons: take a picture with the camera or pick one from the gallery.
  Widget buildSelectImageRowWidget(BuildContext context) {
    return Row(
      children: <Widget>[
        Expanded(
          child: Padding(
            padding: EdgeInsets.symmetric(horizontal: 8.0),
            child: RaisedButton(
              color: Colors.blue,
              textColor: Colors.white,
              splashColor: Colors.blueGrey,
              onPressed: () => onPickImageSelected(CAMERA_SOURCE),
              child: const Text('Camera'),
            ),
          ),
        ),
        Expanded(
          child: Padding(
            padding: EdgeInsets.symmetric(horizontal: 8.0),
            child: RaisedButton(
              color: Colors.blue,
              textColor: Colors.white,
              splashColor: Colors.blueGrey,
              onPressed: () => onPickImageSelected(GALLERY_SOURCE),
              child: const Text('Gallery'),
            ),
          ),
        ),
      ],
    );
  }

  // Radio tiles that store the chosen scanner in _selectedScanner.
  Widget buildSelectScannerRowWidget(BuildContext context) {
    return Row(
      children: <Widget>[
        Expanded(
          child: RadioListTile<String>(
            title: Text('Text Recognition'),
            groupValue: _selectedScanner,
            value: TEXT_SCANNER,
            onChanged: onScannerSelected,
          ),
        ),
        Expanded(
          child: RadioListTile<String>(
            title: Text('Barcode Scanner'),
            groupValue: _selectedScanner,
            value: BARCODE_SCANNER,
            onChanged: onScannerSelected,
          ),
        ),
      ],
    );
  }

  Widget buildImageRow(BuildContext context, File file) {
    return SizedBox(
      height: 500.0,
      child: Image.file(file, fit: BoxFit.fitWidth),
    );
  }

  Widget buildDeleteRow(BuildContext context) {
    return Center(
      child: Padding(
        padding: EdgeInsets.symmetric(horizontal: 8.0, vertical: 8.0),
        child: RaisedButton(
          color: Colors.red,
          textColor: Colors.white,
          splashColor: Colors.blueGrey,
          onPressed: () {
            setState(() {
              _file = null;
            });
          },
          child: const Text('Delete Image'),
        ),
      ),
    );
  }

  void onScannerSelected(String scanner) {
    setState(() {
      _selectedScanner = scanner;
    });
  }

  Future<void> onPickImageSelected(String source) async {
    final imageSource =
        source == CAMERA_SOURCE ? ImageSource.camera : ImageSource.gallery;
    final scaffold = _scaffoldKey.currentState;

    try {
      final file = await ImagePicker.pickImage(source: imageSource);
      // pickImage returns null when the user cancels the picker.
      if (file == null) {
        throw Exception('File is not available');
      }
      Navigator.push(
        context,
        MaterialPageRoute(
          builder: (context) => DetailWidget(file, _selectedScanner),
        ),
      );
    } catch (e) {
      scaffold.showSnackBar(SnackBar(content: Text(e.toString())));
    }
  }
}
```

Image Detection with ML Kit and Display Result in Detail Widget

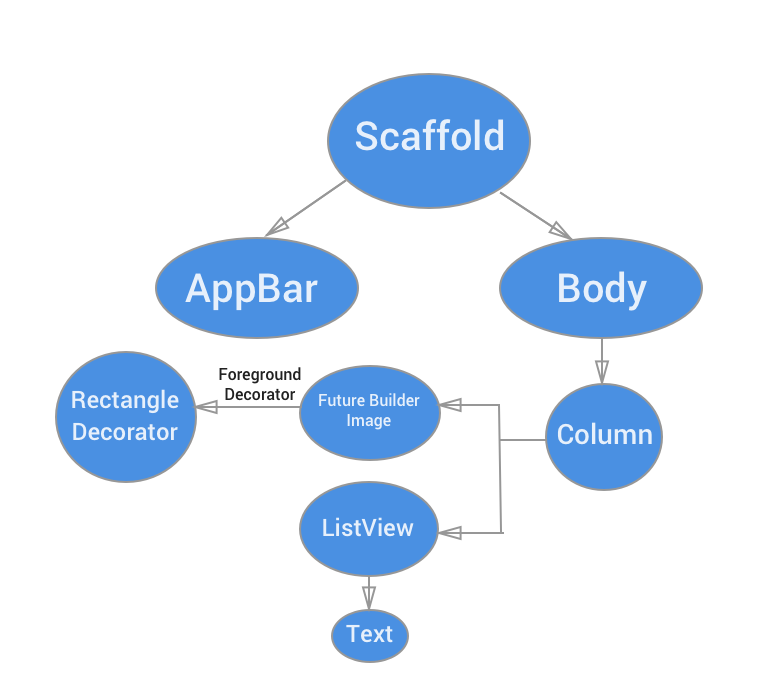

The detail screen widget tree diagram:

DetailWidget accepts two parameters:

- the image File;
- a String holding one of the scanner type constants, so the widget knows whether to run the text detector or the barcode detector on the file.

The singleton instances of FirebaseVisionTextDetector and FirebaseVisionBarcodeDetector from the mlkit library are stored as instance variables. The result lists for text and barcodes are also stored as properties and used to build the widget and the ListView.

When the DetailWidget state is initialized, it starts a one-second Timer. When the Timer fires, it calls the matching detector's detectFromPath method on the image file path using async/await. The detection result is then stored in the instance variable for the selected scanner type (text recognition or barcode scanner).

We use a FutureBuilder widget, which builds itself based on the latest snapshot of a Future. Here that Future comes from the _getImageSize method, which takes the image and resolves to its size in pixels. In the builder, once the snapshot has the image size, we render the Image widget inside a Container. The Container's foregroundDecoration is a custom Decoration whose BoxPainter draws a red rectangle around each detected text block or barcode.
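Both painters convert each detected rectangle from the original image's pixel coordinates to the size at which the image is drawn. Each edge is divided by the original-to-displayed ratio and then shifted by the paint offset. The following plain-Dart sketch isolates that arithmetic so it can be checked without Flutter. It assumes Dart 2.14 or later. Box and toDisplay are hypothetical names standing in for Flutter's Rect and the painter loop. Like the painters, the sketch assumes the decorated box has exactly the size of the drawn image.

```dart
/// Minimal stand-in for Flutter's Rect so this runs as plain Dart.
class Box {
  final double left, top, right, bottom;
  const Box(this.left, this.top, this.right, this.bottom);

  @override
  bool operator ==(Object other) =>
      other is Box &&
      other.left == left &&
      other.top == top &&
      other.right == right &&
      other.bottom == bottom;

  @override
  int get hashCode => Object.hash(left, top, right, bottom);

  @override
  String toString() => 'Box($left, $top, $right, $bottom)';
}

/// Maps a box given in original-image pixels onto the displayed image:
/// divide each edge by (original size / displayed size), then add the offset.
Box toDisplay(
  Box detected, {
  required double imageWidth,
  required double imageHeight,
  required double displayWidth,
  required double displayHeight,
  double offsetX = 0,
  double offsetY = 0,
}) {
  final widthRatio = imageWidth / displayWidth;
  final heightRatio = imageHeight / displayHeight;
  return Box(
    offsetX + detected.left / widthRatio,
    offsetY + detected.top / heightRatio,
    offsetX + detected.right / widthRatio,
    offsetY + detected.bottom / heightRatio,
  );
}
```

For example, a 4000 x 3000 photo drawn at 400 x 300 has a ratio of 10 on both axes. A box detected from (1000, 500) to (2000, 800) is therefore drawn from (100, 50) to (200, 80), before adding the paint offset.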

To display the detected text or barcodes, we use ListView.builder, which builds the list items on demand. Its data comes from the result list that is updated through setState when detection finishes. Each item shows the text of a VisionText, or the raw value of a VisionBarcode.
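The row text and empty-state messages can be expressed as plain functions, which makes the formatting easy to test apart from the widgets. This is a minimal sketch. resultRows and emptyMessage are hypothetical helpers that mirror what buildTextList and buildBarcodeList render, taking the detected values as plain strings.

```dart
/// Hypothetical helper: turns detector output into the strings shown in
/// each ListTile. Barcode rows get the same "Raw Value: " prefix as the app.
List<String> resultRows(List<String> values, {bool isBarcode = false}) {
  return values
      .map((value) => isBarcode ? 'Raw Value: $value' : value)
      .toList();
}

/// Placeholder shown instead of the list when nothing was detected.
String emptyMessage({bool isBarcode = false}) =>
    isBarcode ? 'No barcode detected' : 'No text detected';
```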

```dart
import 'dart:async';
import 'dart:io';

import 'package:flutter/material.dart';
import 'package:mlkit/mlkit.dart';

// Provides TEXT_SCANNER; the package name must match pubspec.yaml.
import 'package:flutter_mlkit_text_barcode/home.dart';

class DetailWidget extends StatefulWidget {
  // Widgets are immutable, so constructor arguments are kept in final fields.
  final File _file;
  final String _scannerType;

  DetailWidget(this._file, this._scannerType);

  @override
  State<StatefulWidget> createState() => _DetailState();
}

class _DetailState extends State<DetailWidget> {
  FirebaseVisionTextDetector textDetector = FirebaseVisionTextDetector.instance;
  FirebaseVisionBarcodeDetector barcodeDetector =
      FirebaseVisionBarcodeDetector.instance;

  List<VisionText> _currentTextLabels = <VisionText>[];
  List<VisionBarcode> _currentBarcodeLabels = <VisionBarcode>[];

  // Created once so that later setState() calls do not restart the FutureBuilder.
  Future<Size> _imageSize;

  @override
  void initState() {
    super.initState();
    if (widget._file != null) {
      _imageSize =
          _getImageSize(Image.file(widget._file, fit: BoxFit.fitWidth));
    }
    // Run detection one second after the screen opens.
    Timer(Duration(milliseconds: 1000), analyzeLabels);
  }

  Future<void> analyzeLabels() async {
    try {
      if (widget._scannerType == TEXT_SCANNER) {
        final labels = await textDetector.detectFromPath(widget._file.path);
        // The user may have left the screen while detection was running.
        if (!mounted) return;
        setState(() {
          _currentTextLabels = labels;
        });
      } else {
        final labels = await barcodeDetector.detectFromPath(widget._file.path);
        if (!mounted) return;
        setState(() {
          _currentBarcodeLabels = labels;
        });
      }
    } catch (e) {
      print(e.toString());
    }
  }

  @override
  Widget build(BuildContext context) {
    final isText = widget._scannerType == TEXT_SCANNER;
    return Scaffold(
      appBar: AppBar(
        centerTitle: true,
        title: Text(isText ? 'Text Recognition' : 'Barcode Scanner'),
      ),
      body: Column(
        children: <Widget>[
          buildImage(context),
          isText
              ? buildTextList(_currentTextLabels)
              : buildBarcodeList(_currentBarcodeLabels),
        ],
      ),
    );
  }

  Widget buildImage(BuildContext context) {
    return Expanded(
      flex: 2,
      child: Container(
        decoration: BoxDecoration(color: Colors.black),
        child: Center(
          child: widget._file == null
              ? Text('No Image')
              : FutureBuilder<Size>(
                  future: _imageSize,
                  builder: (BuildContext context, AsyncSnapshot<Size> snapshot) {
                    if (!snapshot.hasData) {
                      return CircularProgressIndicator();
                    }
                    // Red boxes around detections are painted over the image.
                    return Container(
                      foregroundDecoration: widget._scannerType == TEXT_SCANNER
                          ? TextDetectDecoration(_currentTextLabels, snapshot.data)
                          : BarcodeDetectDecoration(
                              _currentBarcodeLabels, snapshot.data),
                      child: Image.file(widget._file, fit: BoxFit.fitWidth),
                    );
                  },
                ),
        ),
      ),
    );
  }

  Widget buildBarcodeList(List<VisionBarcode> barcodes) {
    if (barcodes.isEmpty) {
      return Expanded(
        flex: 1,
        child: Center(
          child: Text('No barcode detected',
              style: Theme.of(context).textTheme.subhead),
        ),
      );
    }
    return Expanded(
      flex: 1,
      child: ListView.builder(
        padding: const EdgeInsets.all(1.0),
        itemCount: barcodes.length,
        itemBuilder: (context, i) =>
            _buildTextRow('Raw Value: ${barcodes[i].rawValue}'),
      ),
    );
  }

  Widget buildTextList(List<VisionText> texts) {
    if (texts.isEmpty) {
      return Expanded(
        flex: 1,
        child: Center(
          child: Text('No text detected',
              style: Theme.of(context).textTheme.subhead),
        ),
      );
    }
    return Expanded(
      flex: 1,
      child: ListView.builder(
        padding: const EdgeInsets.all(1.0),
        itemCount: texts.length,
        itemBuilder: (context, i) => _buildTextRow(texts[i].text),
      ),
    );
  }

  Widget _buildTextRow(String text) {
    return ListTile(title: Text(text ?? ''), dense: true);
  }

  // Resolves the image to read its pixel size; the painters use it to scale
  // the detected rectangles to the on-screen image.
  Future<Size> _getImageSize(Image image) {
    final completer = Completer<Size>();
    image.image.resolve(ImageConfiguration()).addListener(
      (ImageInfo info, bool _) {
        // The stream can notify more than once; complete only the first time.
        if (!completer.isCompleted) {
          completer.complete(
              Size(info.image.width.toDouble(), info.image.height.toDouble()));
        }
      },
    );
    return completer.future;
  }
}

/*
This code uses the example from azihsoyn/flutter_mlkit
https://github.com/azihsoyn/flutter_mlkit/blob/master/example/lib/main.dart
*/

class BarcodeDetectDecoration extends Decoration {
  final Size _originalImageSize;
  final List<VisionBarcode> _barcodes;

  BarcodeDetectDecoration(List<VisionBarcode> barcodes, Size originalImageSize)
      : _barcodes = barcodes,
        _originalImageSize = originalImageSize;

  @override
  BoxPainter createBoxPainter([VoidCallback onChanged]) {
    return _BarcodeDetectPainter(_barcodes, _originalImageSize);
  }
}

class _BarcodeDetectPainter extends BoxPainter {
  final List<VisionBarcode> _barcodes;
  final Size _originalImageSize;

  _BarcodeDetectPainter(this._barcodes, this._originalImageSize);

  @override
  void paint(Canvas canvas, Offset offset, ImageConfiguration configuration) {
    final paint = Paint()
      ..strokeWidth = 2.0
      ..color = Colors.red
      ..style = PaintingStyle.stroke;

    // Ratio between the original image size and its on-screen size.
    final heightRatio = _originalImageSize.height / configuration.size.height;
    final widthRatio = _originalImageSize.width / configuration.size.width;

    for (var barcode in _barcodes) {
      final rect = Rect.fromLTRB(
        offset.dx + barcode.rect.left / widthRatio,
        offset.dy + barcode.rect.top / heightRatio,
        offset.dx + barcode.rect.right / widthRatio,
        offset.dy + barcode.rect.bottom / heightRatio,
      );
      canvas.drawRect(rect, paint);
    }
  }
}

class TextDetectDecoration extends Decoration {
  final Size _originalImageSize;
  final List<VisionText> _texts;

  TextDetectDecoration(List<VisionText> texts, Size originalImageSize)
      : _texts = texts,
        _originalImageSize = originalImageSize;

  @override
  BoxPainter createBoxPainter([VoidCallback onChanged]) {
    return _TextDetectPainter(_texts, _originalImageSize);
  }
}

class _TextDetectPainter extends BoxPainter {
  final List<VisionText> _texts;
  final Size _originalImageSize;

  _TextDetectPainter(this._texts, this._originalImageSize);

  @override
  void paint(Canvas canvas, Offset offset, ImageConfiguration configuration) {
    final paint = Paint()
      ..strokeWidth = 2.0
      ..color = Colors.red
      ..style = PaintingStyle.stroke;

    // Ratio between the original image size and its on-screen size.
    final heightRatio = _originalImageSize.height / configuration.size.height;
    final widthRatio = _originalImageSize.width / configuration.size.width;

    for (var text in _texts) {
      final rect = Rect.fromLTRB(
        offset.dx + text.rect.left / widthRatio,
        offset.dy + text.rect.top / heightRatio,
        offset.dx + text.rect.right / widthRatio,
        offset.dy + text.rect.bottom / heightRatio,
      );
      canvas.drawRect(rect, paint);
    }
  }
}
```

Conclusion

We have built a Flutter application that uses Firebase ML Kit to detect text and barcodes in images. Flutter gives developers the flexibility to call native Android and iOS libraries and SDKs through platform channels and use them in a Flutter app. Although Firebase ML Kit is still in beta, the detection results already look very good and promising. This project uses on-device detection, but Firebase also provides cloud-based image detection. Stay tuned and happy Fluttering!